Dataflows are a powerful tool for importing large amounts of data from numerous data sources into Dataverse. Being such a powerful tool, certain steps need to be followed to ensure things like lookups and datatypes are handled correctly. The process I use is a very conservative approach as I like to ensure data is correct throughout the process. This guide will be broken down into three parts.

Creating Dataverse table from Single CSV

Creating a Dataverse table from a csv is a method that can be used, though not one I would use on a frequent basis. I would have the csv file saved in a SharePoint Library to showcase how to manage a file that is stored there.

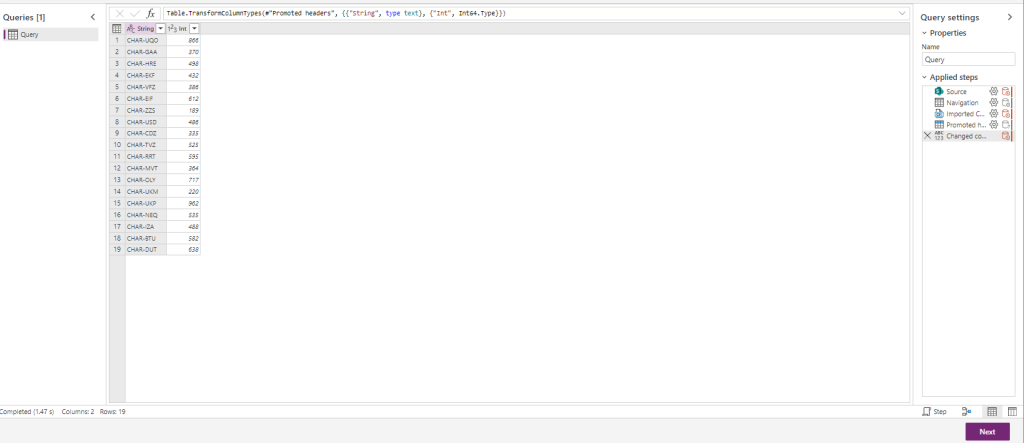

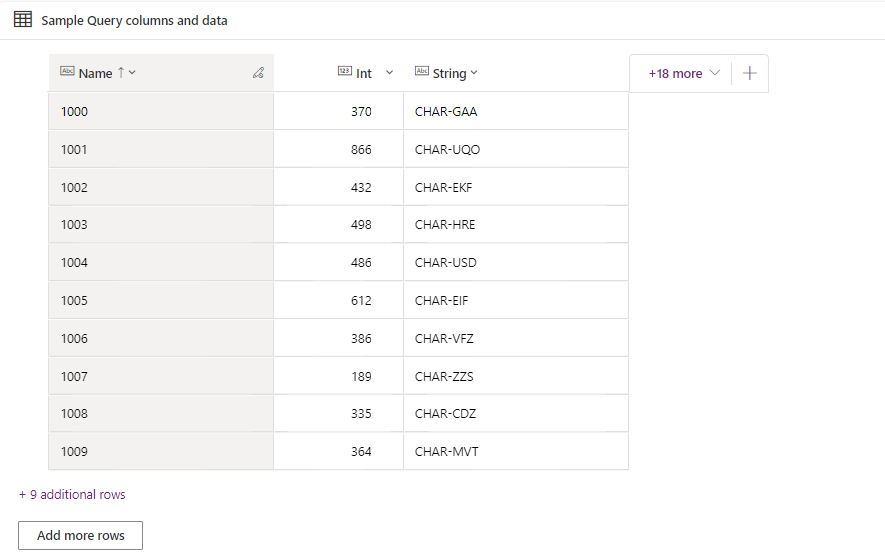

The CSV I would have 20 rows with some random strings and int in it.

| String | Int |

|---|---|

| CHAR-UQO | 866 |

| CHAR-GAA | 370 |

| CHAR-HRE | 498 |

| CHAR-EKF | 432 |

| CHAR-VFZ | 386 |

| CHAR-EIF | 612 |

| CHAR-ZZS | 189 |

| CHAR-USD | 486 |

| CHAR-CDZ | 335 |

| CHAR-TVZ | 525 |

| CHAR-RRT | 595 |

| CHAR-MVT | 364 |

| CHAR-OLY | 717 |

| CHAR-UKM | 220 |

| CHAR-UKP | 962 |

| CHAR-NEQ | 535 |

| CHAR-IZA | 488 |

| CHAR-BTU | 582 |

| CHAR-DUT | 638 |

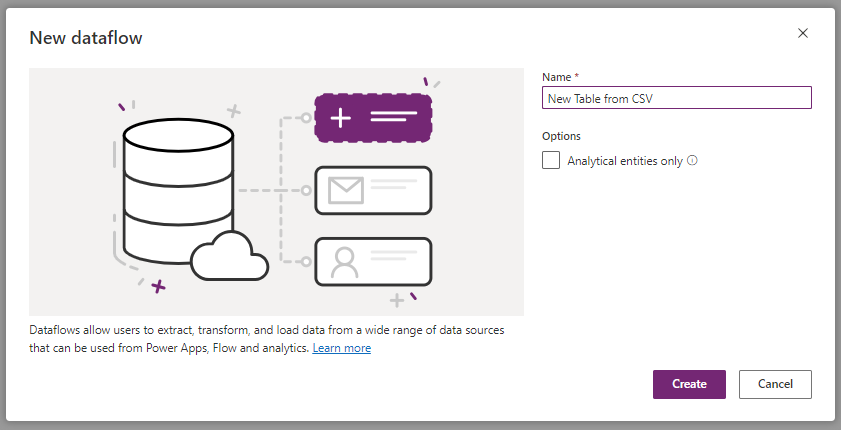

Step one would be to go into the maker portal and create a New Datflow called New Table from CSV

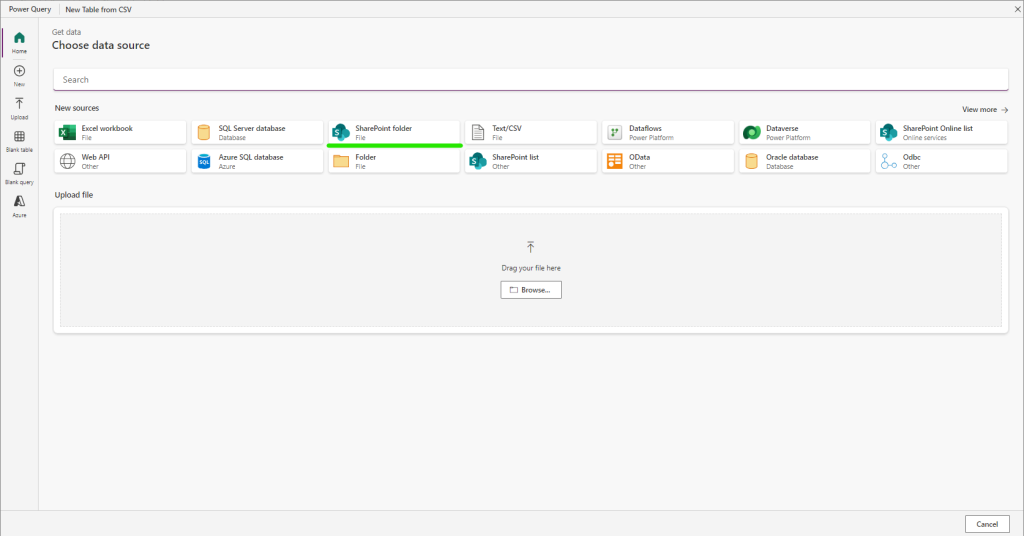

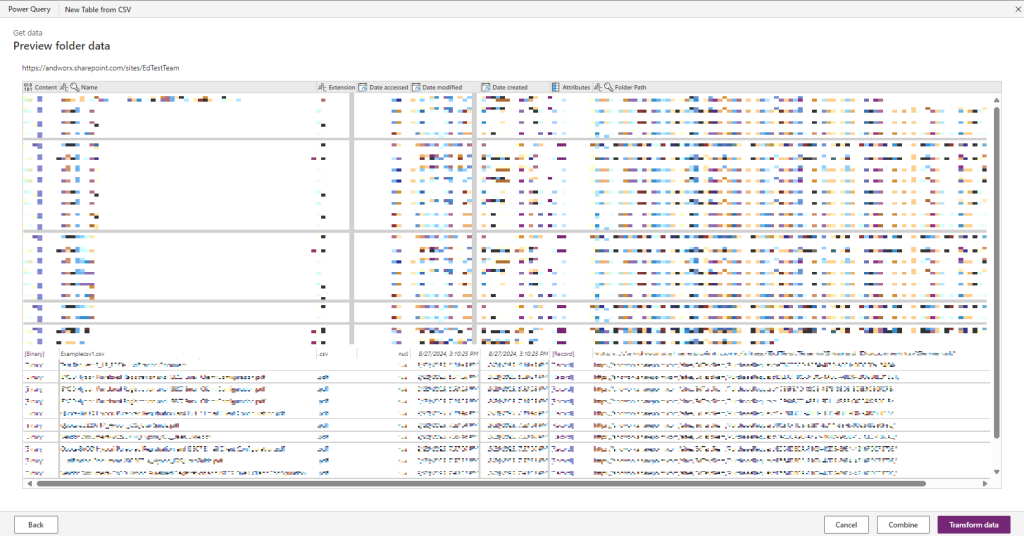

Then select SharePoint folder

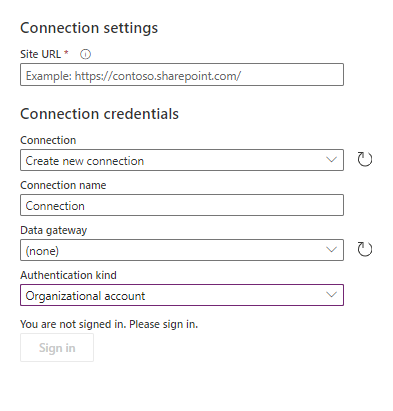

Enter in the URL for the site and Authentication Type to Organizational account and then Sign in

Once the connection is made, press Next.

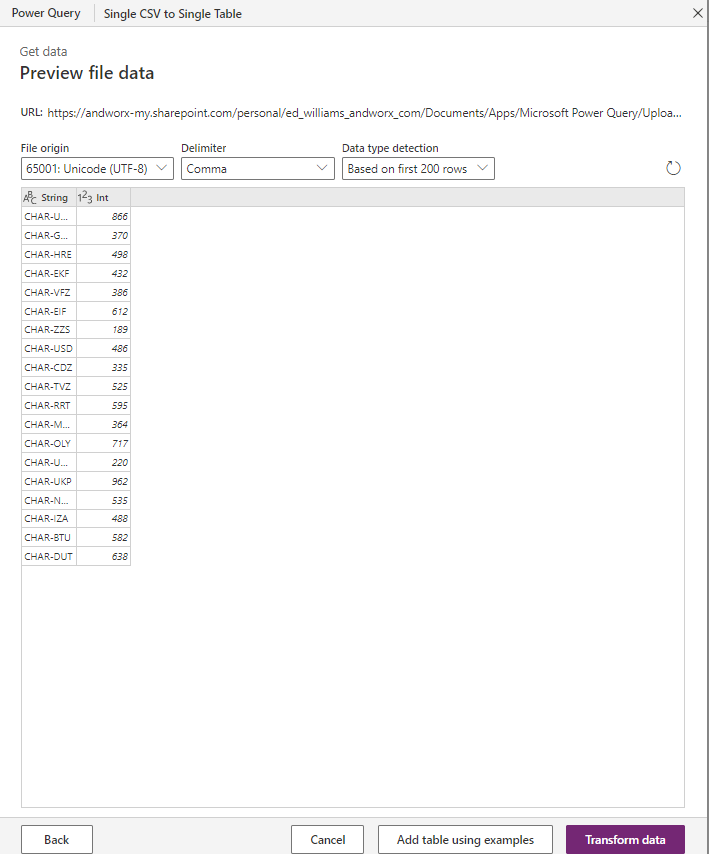

A list of all the items in the SP library selected will be displayed. The one I want is called Examplecsv1.csv. Select Transform to bring in all the data.

Once in Power Query Select Binary from the csv you want to load.

Power Query will then do its best to transform the data into the correct types. Lucky for me Power Query got it all correct. But if it did not, I could massage it as needed. All I have to do is select Next.

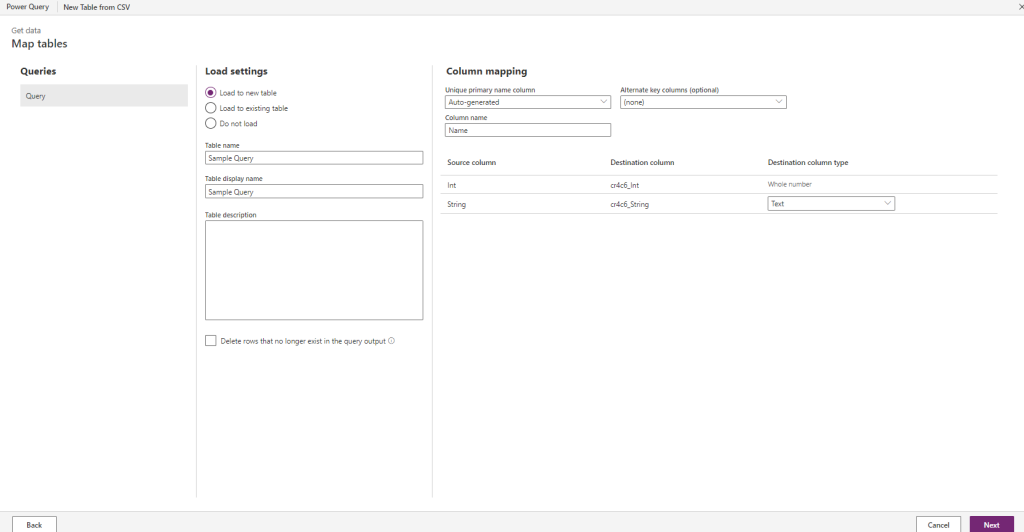

Now I can select Load to new table, set the table name, and build out the columns. With all of it set, hit Next and Publish.

My data is now loaded as expected.

Loading from single CSV to Single Table

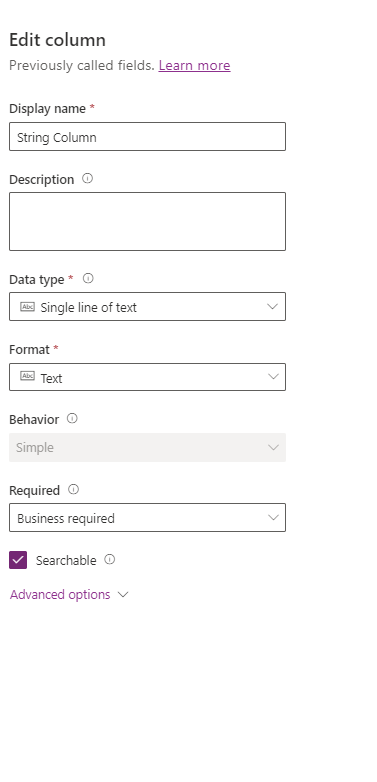

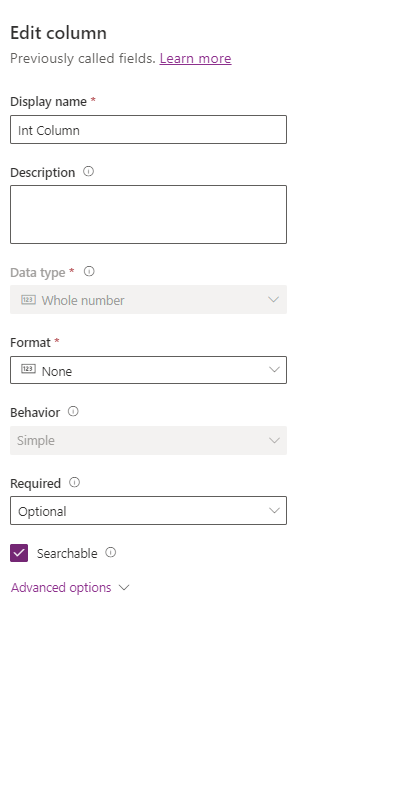

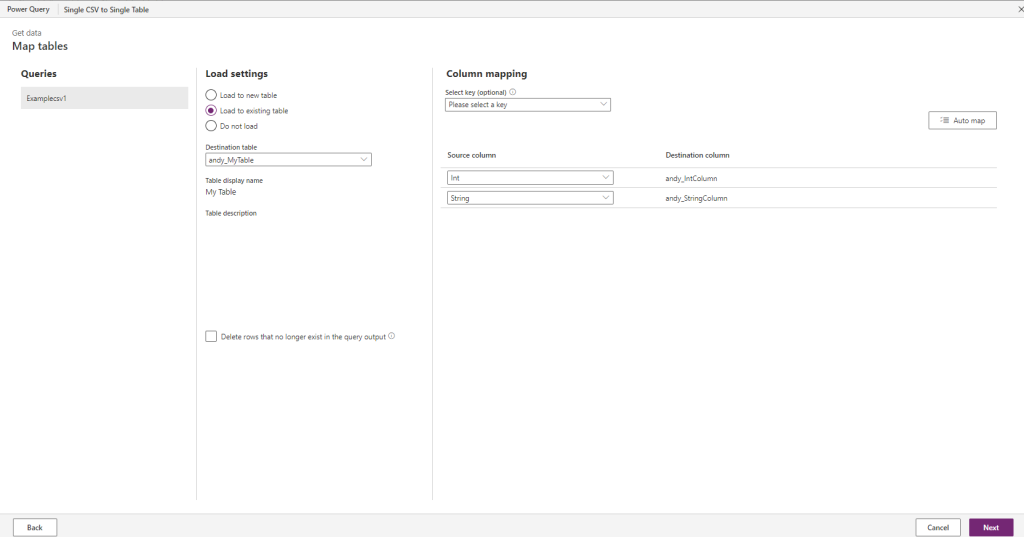

The single csv will load to a table called My Table 1 it will have 2 columns, String Colum, and Int Column

erDiagram

My_Table

My_Table{

string String_Column PK

int Int_Column

}

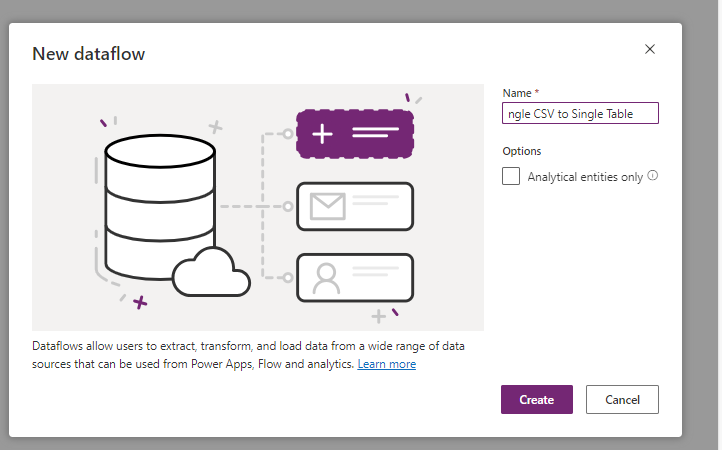

In the Power Apps Maker studio, I have created a new Dataflow called “Single CSV to Single Table”

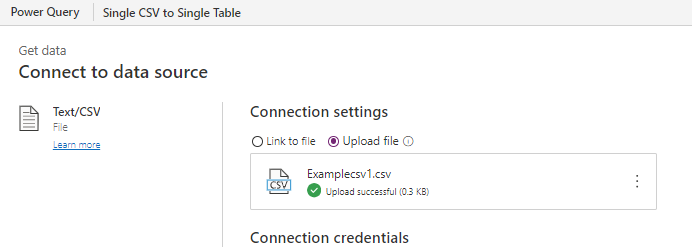

To make this example simple, I am just going to manually upload the csv into the dataflow

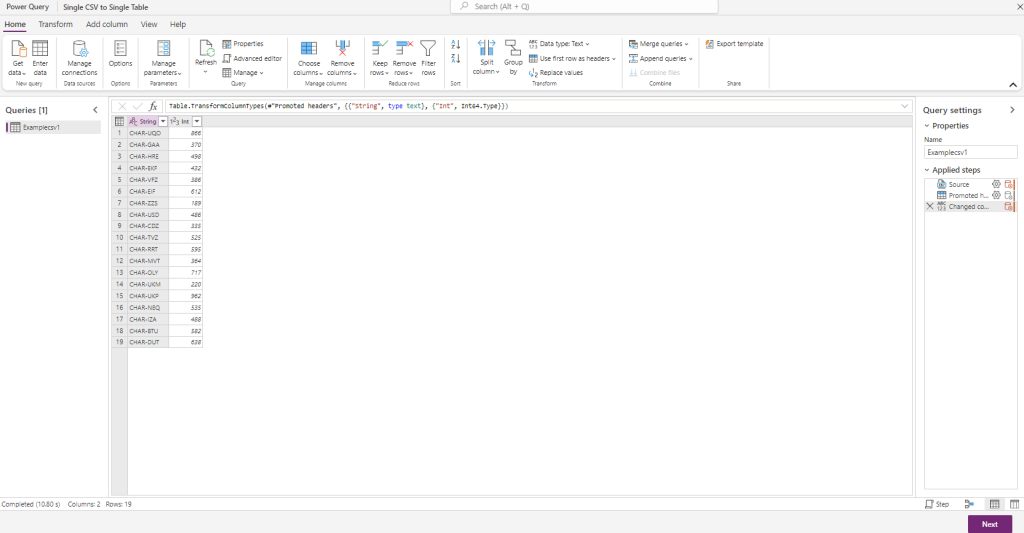

Power Query will now launch giving me a view of the data

As an exercise, I will click on Transform Table, but because it all loaded correctly I really do not need to.

I again see that the data has been brought in correctly and does not require transformations

I have mapped the columns from the csv to the Dataverse table

All I need to do now is select Next and Publish

Loading data into multiple related Dataverse from multiple related csv files

The csv data is as follows

Parent table

| String | Int |

| STRING-1 | 642 |

| STRING-2 | 893 |

| STRING-3 | 600 |

| STRING-4 | 515 |

| STRING-5 | 774 |

| STRING-6 | 442 |

| STRING-7 | 546 |

| STRING-8 | 619 |

| STRING-9 | 522 |

| STRING-10 | 256 |

| STRING-11 | 662 |

| STRING-12 | 384 |

| STRING-13 | 952 |

| STRING-14 | 736 |

| STRING-15 | 244 |

| STRING-16 | 146 |

| STRING-17 | 942 |

| STRING-18 | 462 |

| STRING-19 | 956 |

| STRING-20 | 381 |

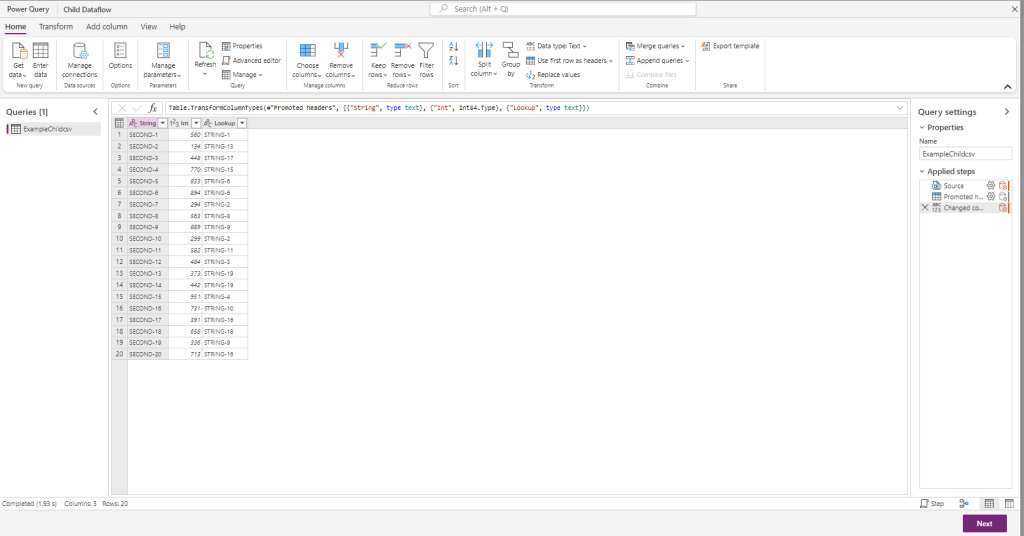

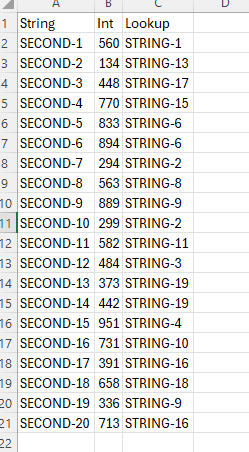

Child table

| String | Int | Lookup |

| SECOND-1 | 560 | STRING-1 |

| SECOND-2 | 134 | STRING-13 |

| SECOND-3 | 448 | STRING-17 |

| SECOND-4 | 770 | STRING-15 |

| SECOND-5 | 833 | STRING-6 |

| SECOND-6 | 894 | STRING-6 |

| SECOND-7 | 294 | STRING-2 |

| SECOND-8 | 563 | STRING-8 |

| SECOND-9 | 889 | STRING-9 |

| SECOND-10 | 299 | STRING-2 |

| SECOND-11 | 582 | STRING-11 |

| SECOND-12 | 484 | STRING-3 |

| SECOND-13 | 373 | STRING-19 |

| SECOND-14 | 442 | STRING-19 |

| SECOND-15 | 951 | STRING-4 |

| SECOND-16 | 731 | STRING-10 |

| SECOND-17 | 391 | STRING-16 |

| SECOND-18 | 658 | STRING-18 |

| SECOND-19 | 336 | STRING-9 |

| SECOND-20 | 713 | STRING-16 |

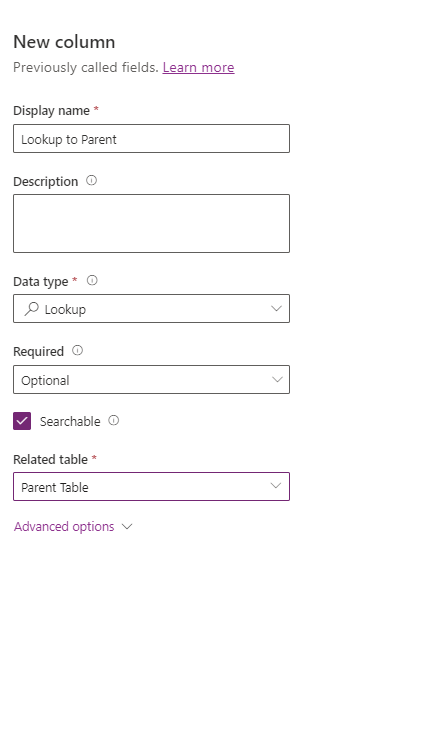

The first step is to create the two Dataverse tables. They will be called Parent and Child tables. In the Child table, I have created a lookup to the child table called “Parent Lookup”

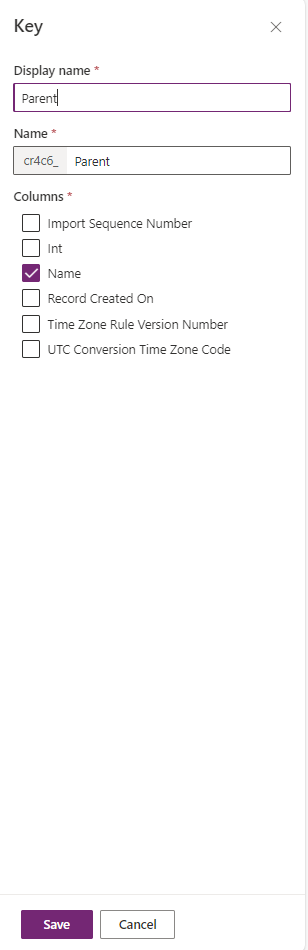

In the Parent table, I have created a key called Parent using the primary name. This will ensure that the data load works for the lookup column

Then, I have loaded the data into the Parent table using the same process as above. Once all that data is loaded, I will create a new dataflow called Child Data flow and upload the csv

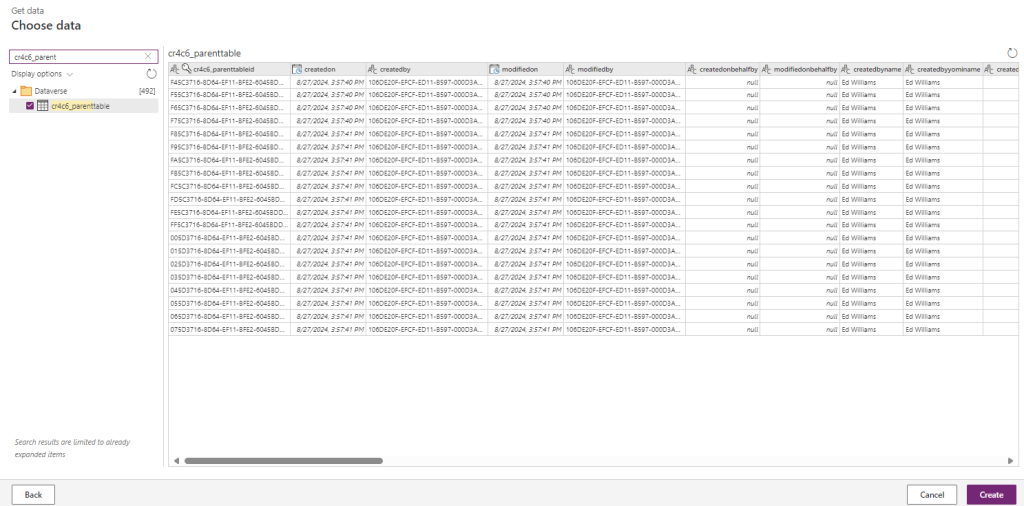

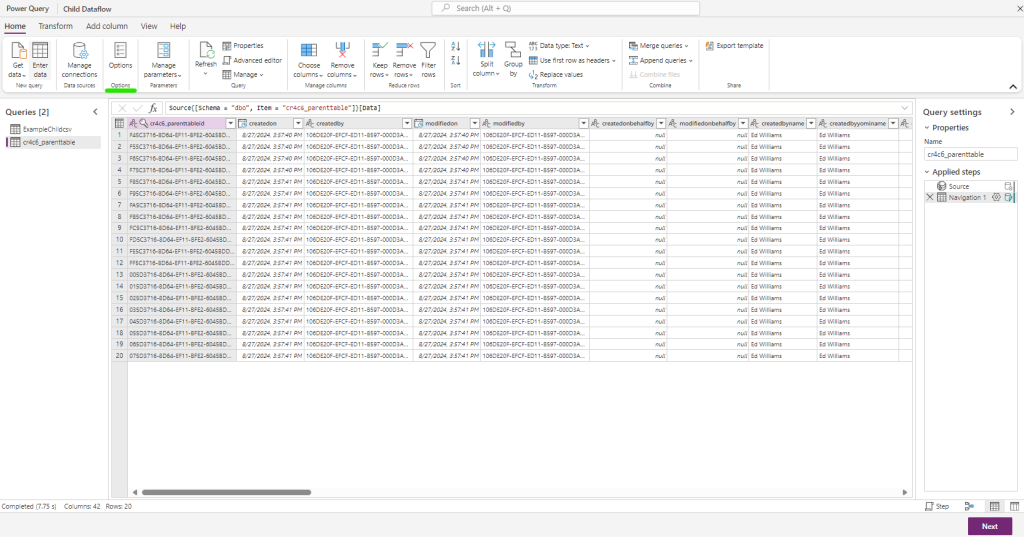

With the data loaded, I will then import in the parent table from Dataverse. Select Get data, then Dataverse. Use the login controls to make a connection to Dataverse and bring in the Parent table

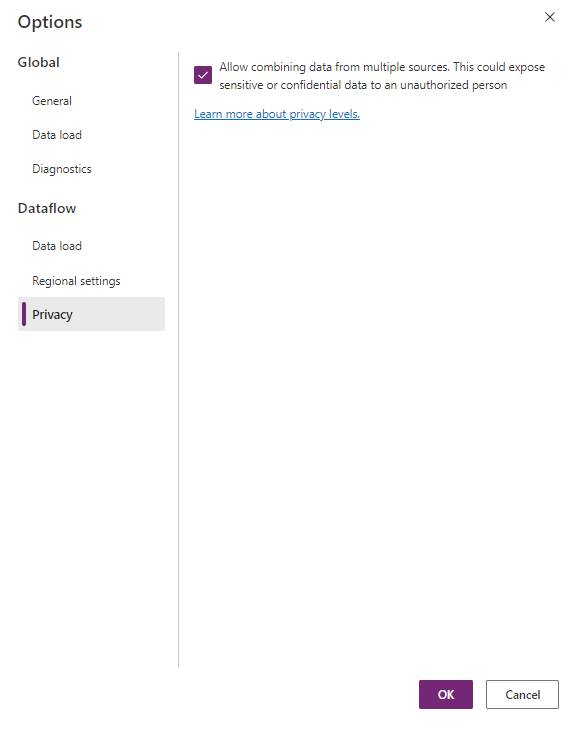

With both datasets loaded, I need to update some security settings, select Options, Privacy and allow combining of data.

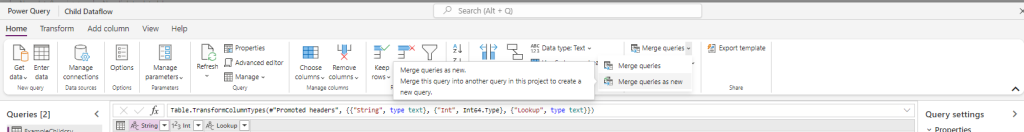

Now select Merge queries as new to join the two tables

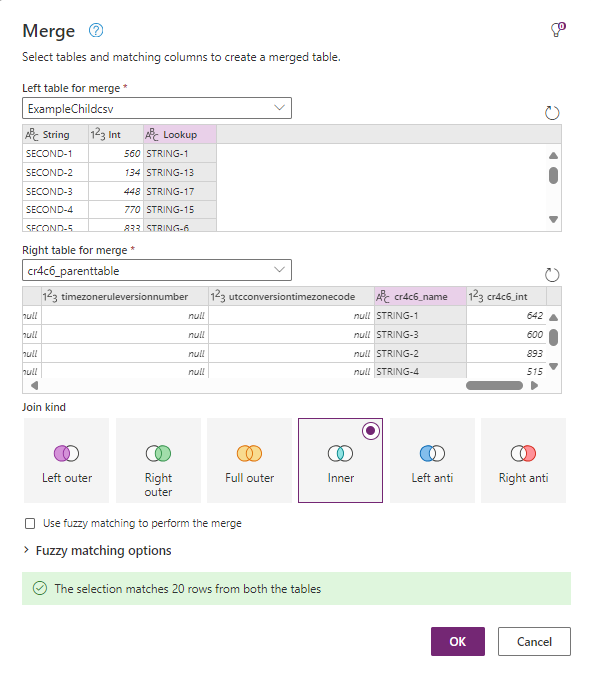

Match the child table with the parent table based on the primary name

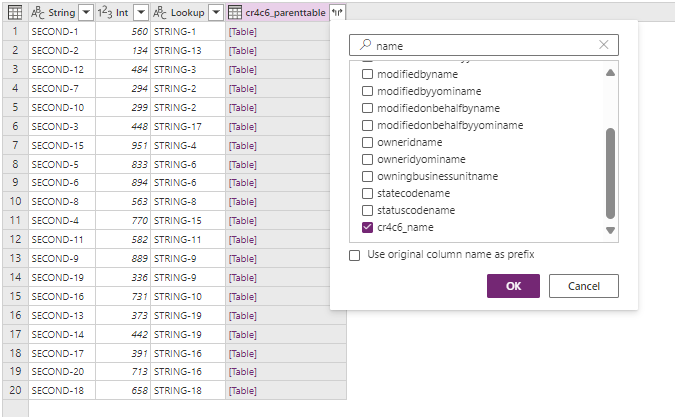

Select the expand columns then select the primary name of the parent table (name for this example).

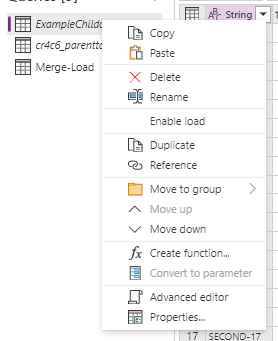

With the merge created, set the other two queries to do not load. Then Select Next

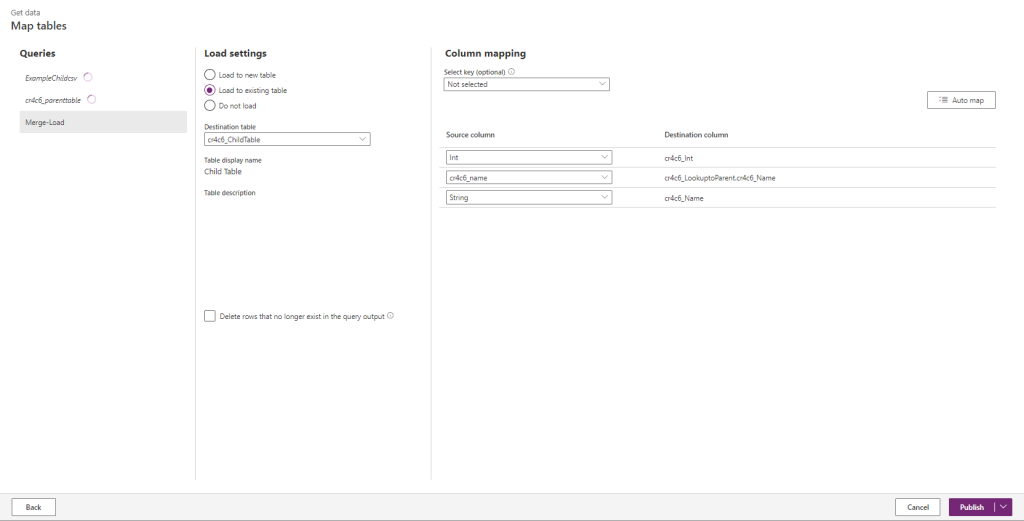

Map the columns to the appropriate one, ensuring that the expanded column above goes to the primary name field of the parent column. Select Publish and watch the data be loaded.

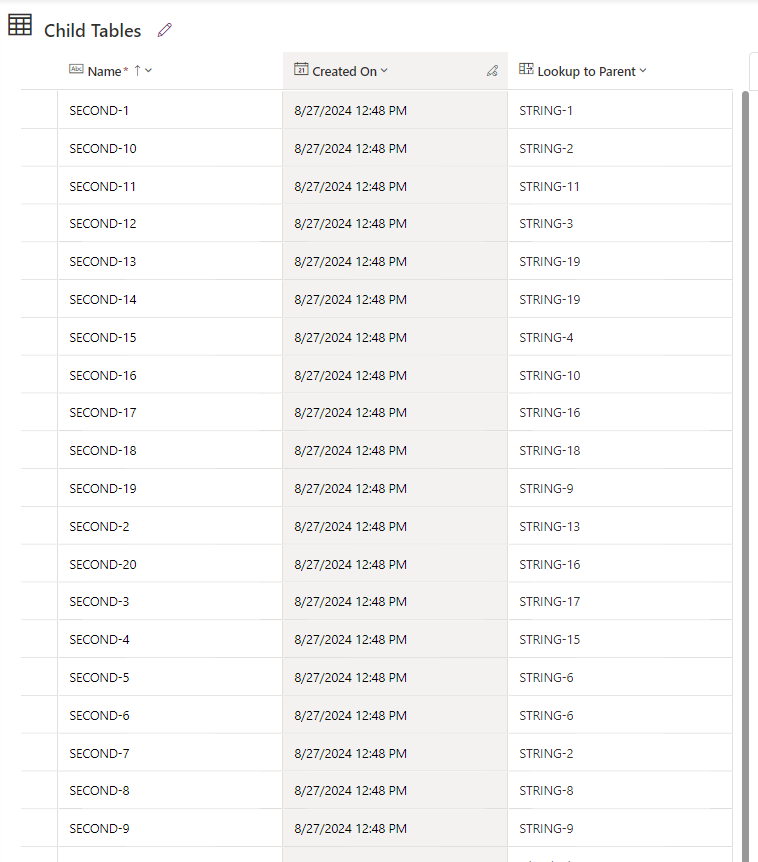

We can now compare the new table with the source data and verify that it all looks as expected.

💡 The parent child tables COULD all be done in one dataflow, but I do not prefer it. I like to ensure my data is going in as expected in chucks

Dataflows are a great tool and with a little planning you can make sure that data gets to the correct place and in the correct format.